Google Indexing of Trac Repositories

06 Sep, 2009

There has been a Trac repository available on trac.cipherdyne.org since 2006, and in that time I've collected quite a lot of Apache log data. Today, the average number of hits per day against the fwknop, psad, fwsnort, and gpgdir Trac repositories combined is over 40,000. These hits come mostly from search engine crawlers such as Googlebot/2.1, msnbot/2.0b, Baiduspider+, and Yahoo! Slurp/3.0. However, not all such crawlers are created equal. It turns out that Google is far and away the most dedicated indexer of the cipherdyne.org Trac repositories, which I find somewhat surprising given Google's reputation for crawling the web efficiently. That is, I would have expected the non-Google crawlers to hit the Trac repositories more often on average than Google does. But perhaps Google is extremely interested in indexing the latest code made available via Trac as quickly as possible, and for svn repositories that are accessible only via Trac (such as the cipherdyne.org repositories), perhaps brute-force indexing of all possible links in Trac is the better approach. Or perhaps the other search engines are simply not as interested in code within Trac repositories and so don't bother to index it aggressively, or maybe they are just not as thorough as Google.

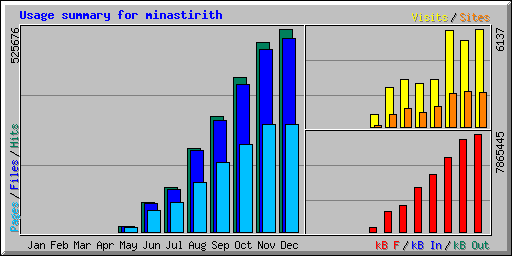

Let's see some examples. The following graphs are produced with the webalizer project. This graph shows the uptick in hits against Trac shortly after it was first made available in 2006:

So, the average number of hits per day goes from 803 starting out in May and jumps rapidly to nearly 17,000 in December. Here are the top five User-Agents and associated hit counts for May:

| Hits | Percentage | User-Agent |
| --- | --- | --- |
| 5062 | 37.06% | Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html) |
| 5004 | 36.63% | noxtrumbot/1.0 (crawler@noxtrum.com) |
| 1283 | 9.39% | msnbot/0.9 (+http://search.msn.com/msnbot.htm) |
| 475 | 3.48% | Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.7.13) Gecko/20060418... |
| 457 | 3.35% | Mozilla/5.0 (X11; U; Linux x86_64; en-US; rv:1.7.12) Gecko/20051229... |

Right off the bat Google is the top crawler, but only just barely. In December, this changes to the following:

| Hits | Percentage | User-Agent |
| --- | --- | --- |
| 478063 | 90.94% | Mozilla/5.0 (compatible; Googlebot/2.1; ... |
| 11299 | 2.15% | Mozilla/5.0 (compatible; BecomeBot/3.0; ... |
| 8287 | 1.58% | msnbot-media/1.0 (+http://search.msn.com/msnbot.htm) |
| 6423 | 1.22% | Mozilla/5.0 (compatible; Yahoo! Slurp; ... |
| 4029 | 0.77% | Mozilla/2.0 (compatible; Ask Jeeves/Teoma; ... |

Now things are drastically different: Google's crawler accounts for over 90% of all hits against Trac, and has generated over 42 times as many hits as the second-place crawler "BecomeBot/3.0" (a shopping-related crawler; maybe they like the price of "zero" for the cipherdyne.org projects).
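The User-Agent tables in this post come from webalizer. For anyone who wants comparable numbers straight from the raw logs, here is a rough sketch. It assumes the Apache "combined" log format (the format of the log lines shown in this post), and the function name `top_agents` is just something made up for illustration. Webalizer may count hits differently, so the results are not guaranteed to match its tables exactly.

```shell
# top_agents N [logfile...]
# Print the N most frequent User-Agent strings as: hits, percentage, agent.
# Assumption: Apache "combined" log format, where splitting a line on
# double quotes puts the User-Agent in field 6. Lines whose request or
# referer contain embedded quotes will be mis-parsed by this simple split.
top_agents() {
    n="$1"; shift
    awk -F'"' '
        NF >= 6 { count[$6]++; total++ }
        END {
            for (ua in count)
                printf "%d\t%.2f%%\t%s\n", count[ua], 100 * count[ua] / total, ua
        }
    ' "$@" | sort -rn | head -n "$n"
}
```

With the log layout used later in this post, something like `top_agents 5 2006/trac_access*` would list the five busiest agents across all of the 2006 logs. With no file arguments the function reads from standard input.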

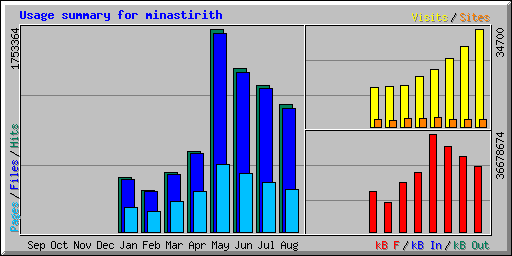

Let's fast forward to 2009 and take a look at how things are shaping up (note that data from 2008 is not included in this graph):

The month of May was certainly an aberration with over 56,000 hits per day, and August topped out at 42,000 hits per day. In May, the top five crawlers were:

| Hits | Percentage | User-Agent |
| --- | --- | --- |
| 1510435 | 86.14% | Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html) |
| 73740 | 4.21% | Mozilla/5.0 (compatible; DotBot/1.1; http://www.dotnetdotcom.org/ |
| 33482 | 1.91% | Mozilla/5.0 (compatible; Charlotte/1.1; http://www.searchme.com/support/) |
| 22699 | 1.29% | Mozilla/5.0 (compatible; Yahoo! Slurp/3.0; ... |
| 20980 | 1.20% | Mozilla/5.0 (compatible; MJ12bot/v1.2.4; ... |

Google maintains a crawling rate of over 20 times as many hits as the second-place crawler "DotBot/1.1". In August 2009 (some of the most recent data), the crawler hit counts were:

| Hits | Percentage | User-Agent |
| --- | --- | --- |
| 947703 | 86.02% | Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html) |
| 43876 | 3.98% | msnbot/2.0b (+http://search.msn.com/msnbot.htm) |
| 30951 | 2.81% | Mozilla/5.0 (compatible; DotBot/1.1; http://www.dotnetdotcom.org/ |
| 11578 | 1.05% | Mozilla/5.0 (compatible; Yahoo! Slurp/3.0; ...) |
| 10880 | 0.99% | msnbot/1.1 (+http://search.msn.com/msnbot.htm) |

This time "msnbot/2.0b" makes it to second place, but it is still far behind Google in terms of hit counts. So, what is Google looking at that needs so many hits? One clue, perhaps, is that Google likes to re-index older data (probably to ensure that a content update has not been missed). Here is an example of all hits against a link that contains the "diff" formatted output for changeset 353 in the fwknop project. The output is organized by year since 2006; the first command counts the number of hits from Google, and the second command shows all of the non-Googlebot hits:

[trac.cipherdyne.org]$ for f in 200*; do echo $f; grep diff $f/trac_access* |grep "/trac/fwknop/changeset/353" | grep Googlebot |wc -l; done

2006

6

2007

4

2008

6

2009

2

[trac.cipherdyne.org]$ for f in 200*; do echo $f; grep diff $f/trac_access* |grep "/trac/fwknop/changeset/353" | grep -v Googlebot ; done

2006

74.6.74.211 - - [10/Oct/2006:07:38:21 -0400] "GET /trac/fwknop/changeset/353?format=diff HTTP/1.0" 200 - "-" "Mozilla/5.0 (compatible; Yahoo! Slurp; http://help.yahoo.com/help/us/ysearch/slurp)"

205.209.170.161 - - [19/Nov/2006:14:17:57 -0500] "GET /trac/fwknop/changeset/353?format=diff HTTP/1.1" 200 - "-" "MJ12bot/v1.0.8 (http://majestic12.co.uk/bot.php?+)"

2007

2008

38.99.44.102 - - [27/Dec/2008:17:51:34 -0500] "GET /trac/fwknop/changeset/353/?format=diff&new=353 HTTP/1.0" 200 - "-" "Mozilla/5.0 (Twiceler-0.9 http://www.cuil.com/twiceler/robot.html)"

2009

208.115.111.250 - - [14/Jul/2009:20:42:32 -0400] "GET /trac/fwknop/changeset/353?format=diff&new=353 HTTP/1.1" 200 - "-" "Mozilla/5.0 (compatible; DotBot/1.1; http://www.dotnetdotcom.org/, crawler@dotnetdotcom.org)"

208.115.111.250 - - [28/Jun/2009:10:00:32 -0400] "GET /trac/fwknop/changeset/353?format=diff&new=353 HTTP/1.1" 200 - "-" "Mozilla/5.0 (compatible; DotBot/1.1; http://www.dotnetdotcom.org/, crawler@dotnetdotcom.org)"

So, Google keeps hitting the "changeset 353" link multiple times each year, whereas the other crawlers (except for DotBot) each hit the link once and never came back. Further, many crawlers have not hit the link at all, so perhaps they are not nearly as thorough as Google.
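The two grep pipelines above can be folded into a single pass that tallies hits per crawler for a given link. This is only a sketch: it again assumes the Apache "combined" log format, and `hits_by_agent` is a hypothetical helper name, not something from an existing tool.

```shell
# hits_by_agent PATTERN [logfile...]
# For requests whose request line contains PATTERN (a fixed string, not a
# regex), print the number of matching hits per User-Agent, busiest first.
# Assumption: Apache "combined" log format, so that splitting on double
# quotes gives the request line in field 2 and the User-Agent in field 6.
# Like the grep commands above, this is a substring match, so a pattern
# ending in "353" would also match a longer number such as "3530".
hits_by_agent() {
    pattern="$1"; shift
    awk -F'"' -v pat="$pattern" '
        NF >= 6 && index($2, pat) { count[$6]++ }
        END { for (ua in count) printf "%d\t%s\n", count[ua], ua }
    ' "$@" | sort -rn
}
```

To mirror the per-year loop above, one could run `for f in 200*; do echo "$f"; grep -h diff "$f"/trac_access* | hits_by_agent "/trac/fwknop/changeset/353"; done`, which shows in one listing which crawlers came back and how often.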

A few questions come to mind to conclude this blog post. Please contact me if you would like to discuss any of these:

- For any other people out there who also run Trac, what crawlers have you seen in your logs, and does Google stand out as a more dedicated indexer than the other crawlers?

- For anyone who runs Trac but also makes the svn repository directly accessible, does Google continue to aggressively index Trac? Does the svn access imply that whatever code is versioned within is used as a more authoritative source than Trac itself?

- It would seem that any crawler could implement an optimization around the Trac timeline feature so that source code change links are indexed only when a timeline update is made. But, perhaps this is too detailed for crawlers to worry about? It would require additional machinery to interpret the Trac application, so search engines most likely try to avoid such customizations.

- Why do the non-Google crawlers lag so far behind in terms of hit counts? Are the differences in resources that Google can bring to bear on crawling the web vs. the other search engines so great that the others just cannot keep up? Or, maybe the others are just not so interested in code that is made available in Trac?
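To make the timeline idea from the third question a bit more concrete, here is a minimal sketch of what such an optimization might look like from the crawler's side. Everything in it is an assumption for illustration: the function name `new_changesets`, the idea of working from a saved copy of the timeline page, and the "seen" file are all hypothetical. The only thing taken from the logs above is the `/trac/<project>/changeset/<N>` link layout. A real crawler would certainly be more involved than this.

```shell
# new_changesets TIMELINE_FILE SEEN_FILE
# Print changeset links that appear in a saved copy of a Trac timeline page
# but are not yet listed in SEEN_FILE, then record them in SEEN_FILE.
# Assumptions: links look like /trac/<project>/changeset/<N>, and grep
# supports -o (true for GNU and BSD grep, though not required by POSIX).
new_changesets() {
    feed="$1"; seen="$2"
    touch "$seen"
    # Collect the distinct changeset links found in the timeline copy.
    grep -o '/trac/[^/"]*/changeset/[0-9][0-9]*' "$feed" | sort -u > "$seen.new"
    # Lines only in the new list are the links not fetched before.
    sort -u "$seen" | comm -13 - "$seen.new"
    # Merge the new links into the record of what has been seen.
    sort -u "$seen" "$seen.new" > "$seen.tmp" && mv "$seen.tmp" "$seen"
    rm -f "$seen.new"
}
```

Run against successive snapshots of the timeline, this prints each changeset link only the first time it shows up, so only those links would need to be requested.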